OpenAI is tightening up ChatGPT’s privacy controls. The company announced today that the AI chatbot’s users can now turn off their chat histories, preventing their input from being used as training data.

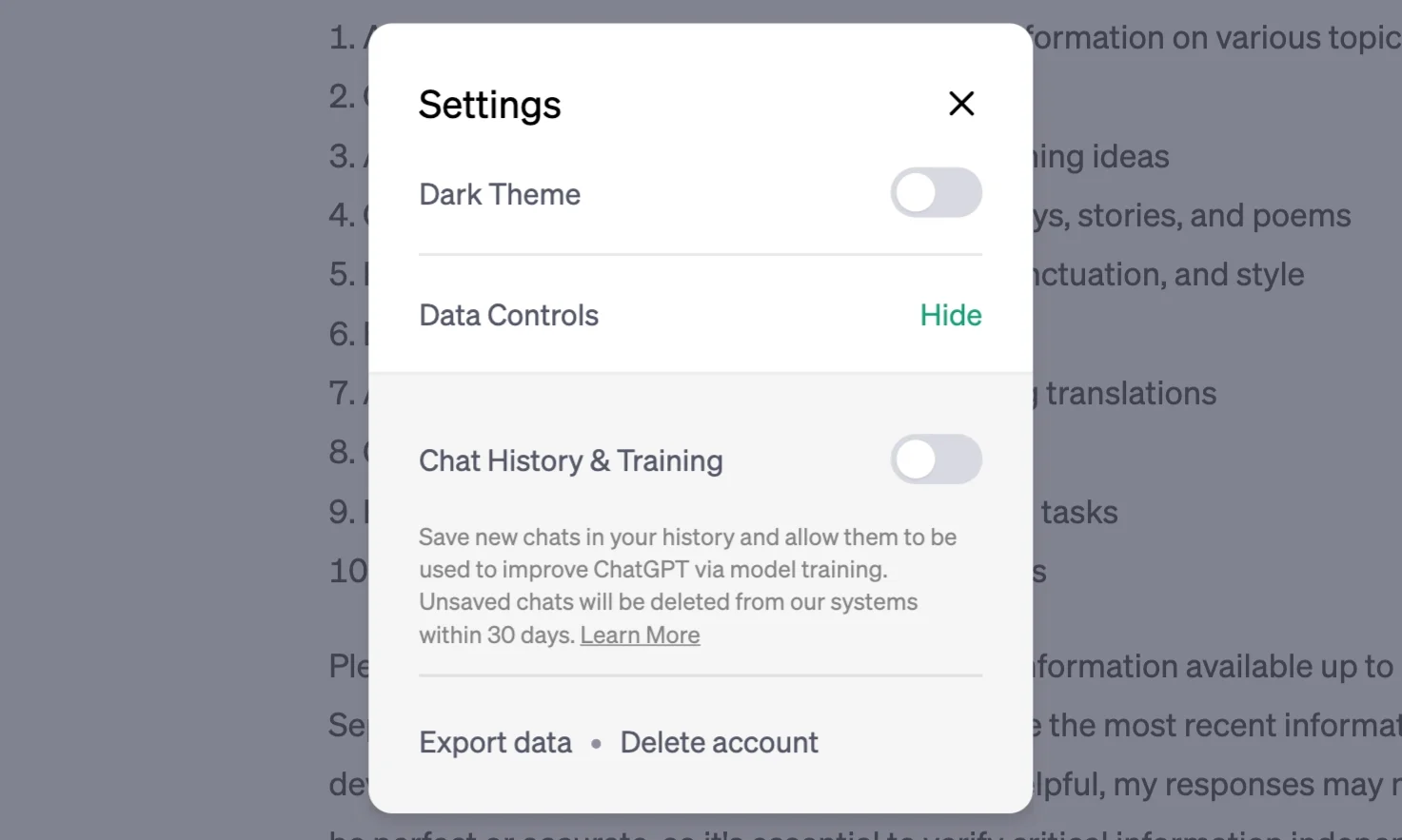

The controls, which roll out “beginning today,” can be found in ChatGPT’s user settings under a new section labeled Data Controls. After toggling the switch off for “Chat History & Training,” you’ll no longer see your recent chats in the sidebar.

Even with history and training turned off, OpenAI says it will still store your chats for 30 days. It does this to prevent abuse, and the company says it will review them only when it needs to monitor for abuse. After 30 days, the company says it permanently deletes them.

OpenAI also announced an upcoming ChatGPT Business subscription in addition to its $20/month ChatGPT Plus plan. The Business option targets “specialists who require more control over their information and businesses looking to handle end users.” The new plan will follow the same data-usage policies as OpenAI’s API, meaning it won’t use your data for training by default. The plan will launch “in the coming months.”

The startup also announced a new export option, letting you email yourself a copy of the data it stores. OpenAI says this will not only let you move your data elsewhere but can also help users understand what information it keeps.

Earlier this month, three Samsung employees were in the spotlight for leaking sensitive data to the chatbot, including recorded meeting notes. By default, OpenAI uses its customers’ prompts to train its models. The company advises its users not to share sensitive information with the bot, adding that it’s “unable to erase particular prompts from your history.” Given how quickly ChatGPT and other AI writing assistants have exploded in recent months, it’s a welcome change for OpenAI to improve its privacy transparency and controls.