The leaked memo admits that Google cannot compete against open-source AI and recommends an unexpected strategy to regain dominance.

A leaked Google memo summarizes why Google is losing to open-source AI and recommends a path back to dominance and owning the platform.

The memo opens by acknowledging that Google’s competitor was never OpenAI and was always going to be open source.

Cannot Compete Against Open Source

Further, they admit that they are not positioned in any way to compete against open source, acknowledging that they have already lost the struggle for AI dominance.

They wrote:

“We’ve done a lot of looking over our shoulders at OpenAI. Who will cross the next milestone? What will the next move be?

But the uncomfortable truth is, we aren’t positioned to win this arms race and neither is OpenAI. While we’ve been squabbling, a third faction has been quietly eating our lunch.

I’m talking, of course, about open source.

Plainly put, they are lapping us. Things we consider ‘major open problems’ are solved and in people’s hands today.”

The bulk of the memo is spent describing how Google is outplayed by open source.

And although Google has a slight advantage over open source, the memo’s author acknowledges that it is slipping away and will never return.

The self-analysis of the metaphoric cards they’ve dealt themselves is considerably downbeat:

“While our models still hold a slight edge in terms of quality, the gap is closing astonishingly quickly.

Open-source models are faster, more customizable, more private, and pound-for-pound more capable.

They are doing things with $100 and 13B params that we struggle with at $10M and 540B.

And they are doing so in weeks, not months.”

Large Language Model Size is Not an Advantage

Perhaps the most chilling realization expressed in the memo is that Google’s size is no longer an advantage.

The outlandishly large size of their models is now seen as a disadvantage and not in any way the insurmountable advantage they thought it to be.

The leaked memo lists a series of events that signal Google’s (and OpenAI’s) control of AI may soon be over.

It recounts that barely a month earlier, in March 2023, the open source community obtained LLaMA, a leaked large language model developed by Meta.

Within days and weeks, the global open-source community developed all the building blocks necessary to create Bard and ChatGPT clones.

Sophisticated steps such as instruction tuning and reinforcement learning from human feedback (RLHF) were quickly replicated by the global open source community, on the cheap no less.

- Instruction tuning

A process of fine-tuning a language model to make it do something specific that it wasn’t initially trained to do.

- Reinforcement learning from human feedback (RLHF)

A technique where humans rate a language model’s output so that it learns which outputs are satisfactory to humans.

RLHF is the technique used by OpenAI to create InstructGPT, which is a model underlying ChatGPT and allows the GPT-3.5 and GPT-4 models to take instructions and complete tasks.

RLHF is the fire that open source has taken.
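The article does not describe how human rankings become a training signal. One common formulation, sketched here as an assumption rather than as any lab’s actual code, trains a reward model on pairs of outputs so that the output a human preferred scores higher than the one they rejected. The function name and the reward values below are hypothetical.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise preference loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model already scores the
    human-preferred output higher, and large when it disagrees.
    """
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)) is algebraically equal to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))


# Hypothetical reward scores for a preferred and a rejected output.
loss_agree = preference_loss(2.0, 0.0)     # model agrees with the human ranking
loss_tie = preference_loss(0.0, 0.0)       # model cannot tell the outputs apart
loss_disagree = preference_loss(0.0, 2.0)  # model prefers the rejected output
```

Minimizing this loss over many human comparisons pushes the reward model toward the human ranking; the language model is then tuned to produce outputs that the reward model scores highly.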

The Scale of Open Source Scares Google

What scares Google in particular is the fact that the open source movement is able to scale its projects in a way that closed source cannot.

The question and answer dataset used to create the open-source ChatGPT clone, Dolly 2.0, was created entirely by thousands of employee volunteers.

Google and OpenAI relied partially on questions and answers scraped from sites like Reddit.

The open source Q&A dataset created by Databricks is claimed to be of higher quality because the humans who contributed to creating it were professionals, and the answers they provided were longer and more substantial than what is found in a typical question and answer dataset scraped from a public forum.

The leaked memo observed:

“At the beginning of March the open source community got their hands on their first really capable foundation model, as Meta’s LLaMA was leaked to the public.

It had no instruction or conversation tuning, and no RLHF.

Nonetheless, the community immediately understood the significance of what they had been given.

A tremendous outpouring of innovation followed, with just days between major developments.

Here we are, barely a month later, and there are variants with instruction tuning, quantization, quality improvements, human evals, multimodality, RLHF, etc. etc. many of which build on each other.

Most importantly, they have solved the scaling problem to the extent that anyone can tinker.

Many of the new ideas are from ordinary people.

The barrier to entry for training and experimentation has dropped from the total output of a major research organization to one person, an evening, and a beefy laptop.”

In other words, what took months and years for Google and OpenAI to train and build took only days for the open-source community.

That has to be a truly frightening scenario for Google.

It’s one of the reasons I’ve been writing so much about the open-source AI movement, as it truly appears to be where the future of generative AI will be within a relatively short time.

Open Source Has Historically Surpassed Closed Source

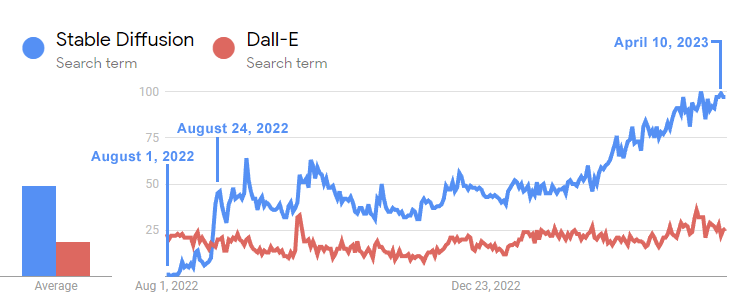

The memo mentions the recent experience with OpenAI s DALL-E, the deep-knowing design utilized to produce images, versus the open-source Stable Diffusion as a precursor of what is presently befalling Generative AI like Bard and ChatGPT.

OpenAI launched Dall-e in January 2021. Steady Diffusion, the open-source variation, was launched a year and a half later, in August 2022, and surpassed the appeal of Dall-E in a couple of short weeks.

This timeline chart demonstrates how qquicklyStable Diffusion surpassed Dall-E:

The above Google Trends timeline demonstrates how interest in outdoor source Stable Diffusion design significantly exceeded that of Dall-E within three weeks of its release.

And though Dall-E had been out for a year and a half, interest in Stable Diffusion kept skyrocketing greatly while OpenAI s Dall-E stayed stagnant.

The existential risk of comparable occasions surpassing Bard (and OpenAI) offers Google headaches.

The Creation Process of Open Source Models is Superior

Another factor alarming engineers at Google is that the process for creating and improving open-source models is fast and inexpensive and lends itself perfectly to the global collaborative approach common to open-source projects.

The memo observes that new techniques, such as LoRA (Low-Rank Adaptation of Large Language Models), allow language models to be fine-tuned in a matter of days at very low cost, with the final LLM comparable to the far more expensive LLMs created by Google and OpenAI.
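To make the cost argument concrete, here is a minimal sketch of the low-rank idea behind LoRA, assuming the usual formulation in which a frozen weight matrix W is adjusted by the product of two small trainable matrices B and A, scaled by alpha / r. The function names, the toy matrices, and the 4096 x 4096 layer with rank 8 are illustrative assumptions, not figures from the memo.

```python
def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pretrained weight.
    A (r x d_in) and B (d_out x r) are the only trainable matrices.
    """
    r = len(A)                         # rank of the low-rank update
    base = matvec(W, x)                # frozen pretrained path
    update = matvec(B, matvec(A, x))   # low-rank adapter path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Return (full fine-tuning count, LoRA count) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


# Toy example: a 3 x 3 frozen weight with a rank-1 adapter.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
A = [[1.0, 1.0, 1.0]]          # r x d_in, with r = 1
B = [[0.0], [0.0], [0.0]]      # d_out x r, zero at the start so the adapter is a no-op
x = [1.0, 2.0, 3.0]

y_start = lora_forward(W, A, B, x, alpha=1.0)    # same as W x while B is zero

B_tuned = [[1.0], [0.0], [0.0]]                  # pretend training changed B
y_tuned = lora_forward(W, A, B_tuned, x, alpha=1.0)

# Hypothetical layer size and rank, chosen only for illustration.
full_count, lora_count = trainable_params(4096, 4096, 8)
```

In this hypothetical layer the adapter trains 65,536 values instead of 16,777,216, a 256-fold reduction. Because W itself is never modified, an adapter can be trained, shared, and stacked on top of earlier work without retraining the base model.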

Another benefit is that open-source engineers can build on top of previous work and iterate, rather than having to start from scratch.

Building large language models with billions of parameters in the way that OpenAI and Google have been doing is not necessary today.

This may be the point that Sam Altman recently hinted at when he said that the era of massive large language models is over.

The author of the Google memo contrasted the cheap and fast LoRA approach to creating LLMs against the current big AI approach.

The memo author reflects on Google’s shortcomings:

“By contrast, training giant models from scratch not only throws away the pretraining, but also any iterative improvements that have been made on top. In the open source world, it doesn’t take long before these improvements dominate, making a full retrain extremely costly.

We should be thoughtful about whether each new application or idea really needs a whole new model.

Indeed, in terms of engineer-hours, the pace of improvement from these models vastly outstrips what we can do with our largest variants, and the best are already largely indistinguishable from ChatGPT.”

The author concludes with the realization that what they thought was their advantage, their giant models and the prohibitive cost that comes with them, was actually a disadvantage.

The global-collaborative nature of open source is more efficient and orders of magnitude faster at innovation.

How can a closed-source system compete against the overwhelming multitude of engineers around the world?

The author concludes that they cannot compete and that direct competition is, in their words, a “losing proposition.”

That’s the crisis, the storm, that’s developing outside of Google.

If You Can’t Beat Open Source, Join Them.

The only consolation the memo author finds in open source is that because the open source innovations are free, Google can also take advantage of them.

The author concludes that the only approach open to Google is to own the platform in the same way they dominate the open-source Chrome and Android platforms.

They point to how Meta is benefiting from releasing their LLaMA large language model for research and how they now have thousands of people doing their work for free.

Perhaps the big takeaway from the memo is that Google may in the near future try to replicate their open source dominance by releasing their projects on an open source basis and thereby own the platform.

The memo concludes that going open source is the most viable option:

“Google should establish itself a leader in the open source community, taking the lead by cooperating with, rather than ignoring, the broader conversation.

This probably means taking some uncomfortable steps, like publishing the model weights for small ULM variants. This necessarily means relinquishing some control over our models.

But this compromise is inevitable.

We cannot hope to both drive innovation and control it.”

Open Source Walks Away With the AI Fire

Recently I made an allusion to the Greek myth of the Titan Prometheus stealing fire from the gods on Mount Olympus, casting open source as Prometheus against the “Olympian gods” of Google and OpenAI:

I tweeted:

“While Google, Microsoft and Open AI squabble amongst each other and have their backs turned, is Open Source walking off with their fire?”

The leak of Google’s memo confirms that observation. But it also points to a possible strategy change at Google to join the open source movement and thereby co-opt it and dominate it in the same way they did with Chrome and Android.

Read the leaked Google memo here:

Google “We Have No Moat, And Neither Does OpenAI”